
Table of Contents

- Uncovering the Hidden Energy Costs of AI

- The Energy Demands of AI

- Training Large Language Models

- Inference and Deployment

- Case Studies: AI’s Energy Footprint in Action

- Case Study 1: DeepMind’s AlphaFold

- Case Study 2: Facebook’s AI Infrastructure

- The Opacity of AI Energy Use

- Lack of Transparency

- Proprietary Models and Competitive Pressures

- Regulatory and Policy Challenges

- Who Should Regulate AI?

- What Should Be Regulated?

- Toward Sustainable AI

- Green AI Initiatives

- Academic and Industry Collaboration

- Conclusion: A Call for Responsible Innovation

Uncovering the Hidden Energy Costs of AI

As artificial intelligence (AI) reshapes industries and economies and redefines the boundaries of human-machine interaction, a less visible but increasingly critical issue is emerging: the energy consumption of AI systems. AI promises efficiency and innovation, but it also demands vast computational resources, which translate into significant energy use. This hidden cost has implications not only for sustainability but also for policy, governance, and global equity. It also makes the question of who should regulate AI, and how, both pressing and remarkably complex.

The Energy Demands of AI

Training Large Language Models

One of the most energy-intensive aspects of AI is the training of large language models (LLMs) such as OpenAI’s GPT-4 or Google’s PaLM. These models require massive datasets and extensive computational power to learn patterns and generate human-like text. According to a 2019 study by the University of Massachusetts Amherst, training a single large AI model (in that study, a Transformer tuned with neural architecture search) can emit roughly as much carbon as five average cars over their lifetimes, fuel included. Since then, models have grown by orders of magnitude in parameter count.

For example, GPT-3, with 175 billion parameters, required an estimated 1,287 MWh of electricity to train, resulting in over 550 metric tons of carbon dioxide emissions. GPT-4, though its exact size and training data remain undisclosed, is believed to have required even more resources. These figures highlight the environmental cost of developing cutting-edge AI technologies.
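The two GPT-3 figures above imply an average carbon intensity for the electricity used, which can be checked with a little arithmetic. The sketch below is illustrative only: the function names are invented for this example, and it treats "over 550 metric tons" as exactly 550 t, so the result is a lower bound rather than a reported value.

```python
# Back-of-the-envelope check of the GPT-3 figures quoted above.
# Assumption: "over 550 metric tons" is treated as exactly 550 t.

def implied_carbon_intensity(energy_mwh: float, emissions_t: float) -> float:
    """Return the implied carbon intensity in kg CO2 per kWh."""
    energy_kwh = energy_mwh * 1_000      # 1 MWh = 1,000 kWh
    emissions_kg = emissions_t * 1_000   # 1 metric ton = 1,000 kg
    return emissions_kg / energy_kwh


def emissions_tonnes(energy_mwh: float, intensity_kg_per_kwh: float) -> float:
    """Estimate emissions (metric tons CO2) for a given energy use and intensity."""
    return energy_mwh * 1_000 * intensity_kg_per_kwh / 1_000


GPT3_ENERGY_MWH = 1_287   # from the text
GPT3_EMISSIONS_T = 550    # lower bound from the text

intensity = implied_carbon_intensity(GPT3_ENERGY_MWH, GPT3_EMISSIONS_T)
print(f"Implied intensity: {intensity:.3f} kg CO2/kWh")
```

Passing a different intensity to `emissions_tonnes` shows how the same 1,287 MWh would translate into emissions on a cleaner or dirtier grid; any such intensity value would be an assumption, not a figure from the studies cited here.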

Inference and Deployment

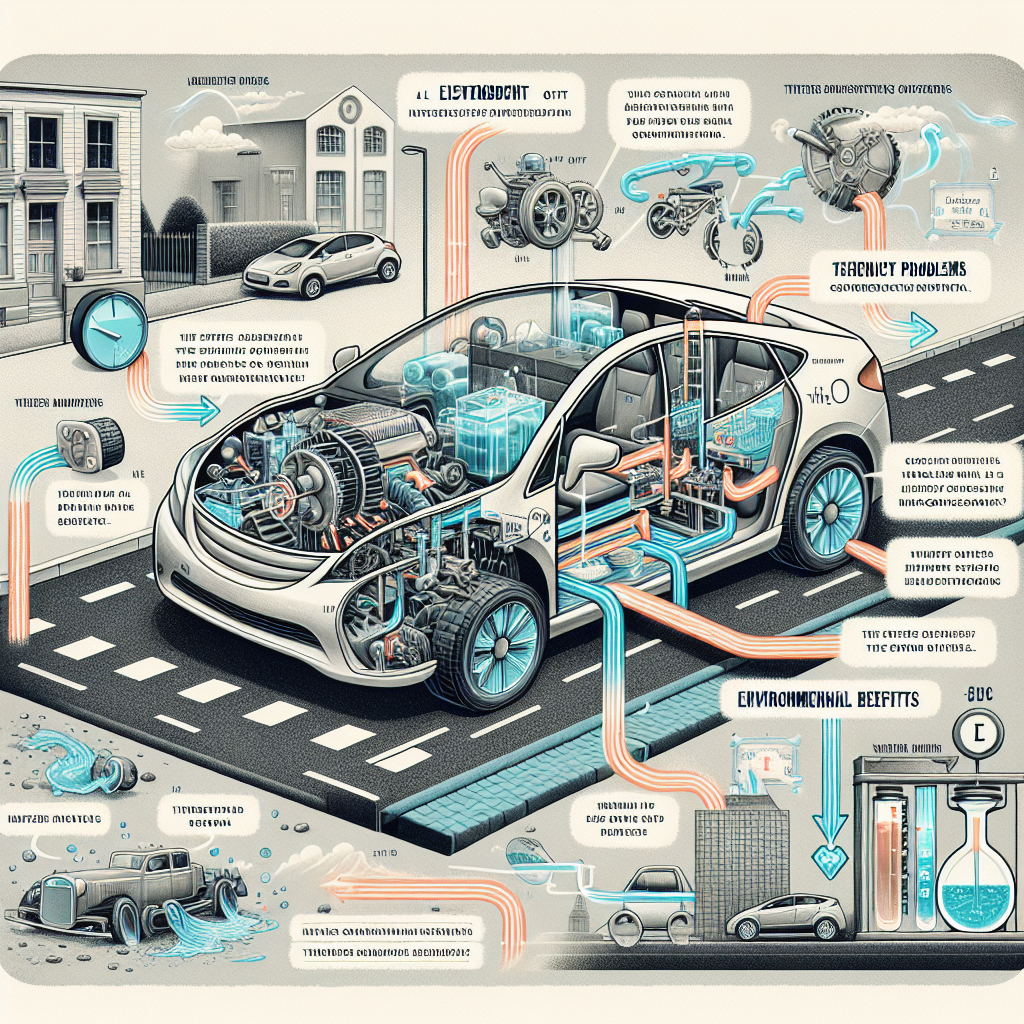

While training is energy-intensive, inference—the process of using a trained model to make predictions or generate content—also consumes significant energy, especially when deployed at scale. AI-powered services like real-time translation, recommendation engines, and chatbots operate continuously, often across millions of devices and servers.

According to a 2021 report by the International Energy Agency (IEA), data centers already account for about 1% of global electricity demand, and this figure is expected to rise as AI adoption increases. The proliferation of AI in consumer applications, from voice assistants to autonomous vehicles, further compounds the energy burden.
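To see why inference at scale matters, it can help to estimate how long a deployed service would take to use as much energy as the training run itself. The following is a rough sketch, not a measurement: the per-query energy and the daily request volume are hypothetical placeholders chosen only to show the calculation, and the training figure is the GPT-3 estimate quoted earlier.

```python
# Illustrative only: per-query energy and daily traffic are HYPOTHETICAL
# placeholders, not measurements from any real service.

TRAINING_ENERGY_KWH = 1_287_000   # GPT-3 training estimate quoted earlier (1,287 MWh)
ENERGY_PER_QUERY_WH = 1.0         # hypothetical: watt-hours per inference request
QUERIES_PER_DAY = 10_000_000      # hypothetical: daily request volume


def daily_inference_kwh(queries_per_day: int, wh_per_query: float) -> float:
    """Energy used by inference in one day, in kWh."""
    return queries_per_day * wh_per_query / 1_000


def days_to_match_training(training_kwh: float, daily_kwh: float) -> float:
    """Days of serving after which cumulative inference energy equals training energy."""
    return training_kwh / daily_kwh


daily = daily_inference_kwh(QUERIES_PER_DAY, ENERGY_PER_QUERY_WH)
days = days_to_match_training(TRAINING_ENERGY_KWH, daily)
print(f"Daily inference energy: {daily:,.0f} kWh")
print(f"Days to match training energy: {days:,.1f}")
```

Under these made-up inputs, inference overtakes training within months; with real traffic and real per-query costs the crossover could fall much earlier or later, which is one reason disclosure of both numbers matters.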

Case Studies: AI’s Energy Footprint in Action

Case Study 1: DeepMind’s AlphaFold

DeepMind’s AlphaFold, which solved the decades-old problem of protein folding, is a landmark achievement in AI. However, the computational cost was substantial. The AlphaFold 2 paper reports training on 128 TPU v3 cores for about a week, followed by roughly four more days of fine-tuning. While the scientific benefits are undeniable, the energy cost raises questions about the sustainability of such breakthroughs.

Case Study 2: Facebook’s AI Infrastructure

Meta (formerly Facebook) has invested heavily in AI to power its content moderation, recommendation algorithms, and virtual reality initiatives. In 2020, the company, then still operating as Facebook, announced plans to build new data centers powered by renewable energy. However, the sheer scale of its AI operations, which process billions of user interactions daily, means that even with green energy, the environmental impact remains substantial.

The Opacity of AI Energy Use

Lack of Transparency

One of the major challenges in assessing AI’s energy impact is the lack of transparency. Companies often do not disclose the energy consumption or carbon footprint of their AI models. This opacity makes it difficult for regulators, researchers, and the public to understand the true environmental cost of AI technologies.

As one MIT Technology Review article noted, “The secrecy around training data, model size, and energy use makes it nearly impossible to hold companies accountable or to compare models on sustainability metrics.” This lack of standardization and openness hinders efforts to create more energy-efficient AI systems.

Proprietary Models and Competitive Pressures

AI development is highly competitive, with companies racing to release more powerful models. This competition incentivizes secrecy, as firms are reluctant to reveal details that could give rivals an edge. As a result, even academic researchers often struggle to access the data needed to evaluate energy use and environmental impact.

Regulatory and Policy Challenges

Who Should Regulate AI?

The question of who should regulate AI is currently the subject of intense debate. In the United States, a political battle is unfolding in Washington over which agencies should oversee the sector. The Federal Trade Commission (FTC), the National Institute of Standards and Technology (NIST), and even the Department of Energy have all expressed interest in AI governance.

Globally, the European Union has taken a more proactive stance with its proposed AI Act, which includes provisions for transparency, accountability, and risk assessment. However, energy consumption is not yet a central focus of most regulatory frameworks.

What Should Be Regulated?

Determining what aspects of AI to regulate is equally complex. Should governments mandate energy efficiency standards for AI models? Should companies be required to disclose the carbon footprint of their AI systems? Should there be limits on the size or training duration of models?

These questions are difficult to answer, especially given the rapid pace of AI development and the global nature of the industry. Moreover, regulation must balance innovation with sustainability, ensuring that environmental concerns do not stifle technological progress.

Toward Sustainable AI

Green AI Initiatives

In response to growing concerns, some researchers and organizations are advocating for “Green AI”—a movement focused on reducing the environmental impact of artificial intelligence. This includes developing more efficient algorithms, using renewable energy for data centers, and promoting transparency in energy reporting.

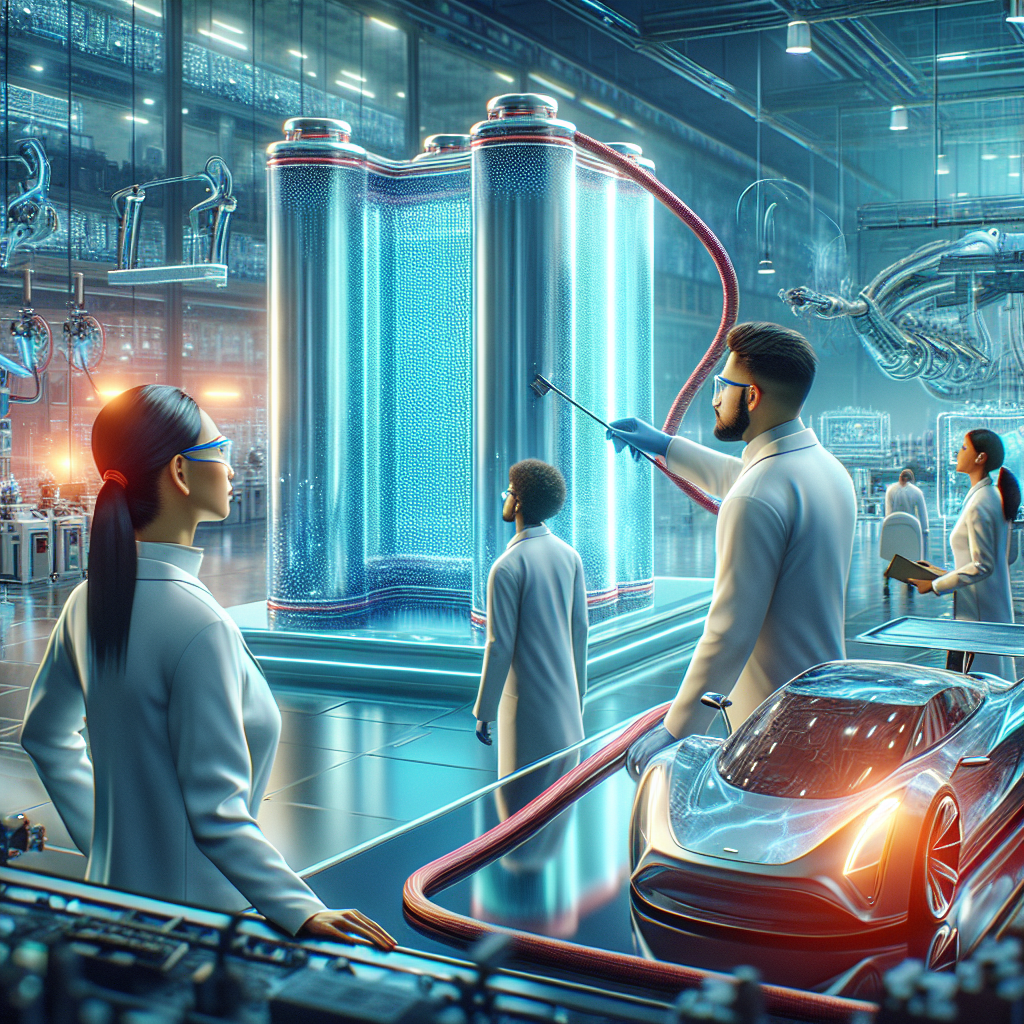

- Efficient Algorithms: Techniques like model pruning, quantization, and knowledge distillation can reduce the size and energy requirements of AI models without significantly compromising performance.

- Renewable Energy: Companies like Google and Microsoft have committed to powering their data centers with 100% renewable energy, though implementation varies by region.

- Energy Reporting Standards: Initiatives like the ML CO2 Impact calculator aim to standardize how researchers report the energy use and emissions of their models.
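Of the techniques listed above, quantization is the simplest to illustrate. The sketch below shows symmetric 8-bit quantization with a single shared scale, using only the Python standard library. It is a minimal illustration under stated assumptions, not how any particular framework implements it: the weights are made-up values, and the function names are hypothetical.

```python
# Minimal sketch of symmetric 8-bit quantization (standard library only).
# The weights below are made-up values for illustration.

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.33, -0.64]   # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("int8 values:", q)
print(f"max round-trip error: {max_error:.4f}")
print("storage: 4 bytes/weight as float32 vs 1 byte/weight as int8")
```

Each weight shrinks from four bytes to one, at the cost of a rounding error bounded by half the scale factor. Whether that trade-off preserves accuracy for a given model has to be checked empirically.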

Academic and Industry Collaboration

Collaboration between academia, industry, and government is essential to address the energy challenges of AI. Joint research initiatives can help develop benchmarks for energy efficiency, while public-private partnerships can fund the development of sustainable AI infrastructure.

Conclusion: A Call for Responsible Innovation

Artificial intelligence holds immense promise, from revolutionizing healthcare to combating climate change. Yet, as we embrace these technologies, we must also confront their hidden costs. The energy demands of AI are not just a technical issue—they are a societal challenge that intersects with environmental sustainability, economic equity, and ethical governance.

Answering these questions will be